How GPT/ChatGPT Work - An Understandable Introduction to the Technology - Professor Harry Surden

Last updated: May 6, 2023

Artificial intelligence (AI) is a way of using computers to solve problems and make automated decisions in tasks that require high-level thinking.

Generative AI, a specific type of AI, focuses on creating human-like outputs such as music, art, and text.

Generative pre-trained transformer (GPT) is an example of generative AI that generates high-quality text one word at a time, based on all the text that precedes it.

GPT, which uses word vectors, neural networks, and attention mechanisms, was developed by OpenAI.

Pre-training, the process of teaching the AI patterns of human thought and language, and fine-tuning, the process of training the AI on specific tasks, are crucial steps in using GPT.

The OpenAI Playground allows experimenting with older AI models, including GPT-3, which was the first GPT model that could generate fairly lifelike text.

- AI uses computers to solve problems and make automated decisions

- Generative AI focuses on creating human-like outputs such as music, art, and text

- GPT is an AI text generator that uses word vectors, neural networks, and attention mechanisms

- Pre-training teaches the AI patterns of human thought and language, while fine-tuning trains the AI on specific tasks

- The OpenAI Playground allows experimenting with old AI models, including GPT-3

- GPT-3 was the first GPT model that could generate fairly lifelike text

- GPT/ChatGPT uses AI to generate text and make predictions.

- Word vectors and embeddings give meaning to words mathematically.

- GPT/ChatGPT can handle reasoning and generate complex documents.

- GPT/ChatGPT can generate biased text and may contain mistakes.

- It is trained using neural networks and deep learning.

- It can be fine-tuned for specific tasks.

What is generative AI? 01:33

- There is no agreed-upon definition of artificial intelligence (AI).

- AI is using computers to solve problems, make predictions, answer questions, or make automated decisions or actions in tasks that engage higher-order cognitive activities.

- Generative AI is a specific set of AI focused on creating creative outputs that are generally associated with humans, such as art, music, and text.

- Generative AI tools can create high-quality art, music, and text from a user's description of what they want.

Generative Text 04:56

- Generative text is the biggest breakthrough in AI that is going to change our society.

- GPT is an automated text generator created by OpenAI that can make stories, poems, articles, blog content in the style of people.

- GPT generates text one word at a time, based on all the text that came before it, including whatever you have typed or pasted in.

- GPT is considered generative because it's generating texts.

- Generative pre-trained Transformer (GPT) is a large language model used for generative text created by OpenAI.

- Pre-trained means they took a huge portion of the internet, actual books, most of the laws, and set the algorithm loose on vast quantities of text to learn the patterns of reasoning and how language works.

- GPT learned a huge number of patterns about reasoning and how language works during the pre-training process.

- It is an expensive process of getting the AI to learn patterns of human thought and language.

- GPT is one example of a large language model (LLM); the two terms are often used interchangeably.
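
The "one word at a time" idea above can be sketched in a few lines. This is only a toy illustration: the `NEXT_WORD_PROBS` table and the `generate` function are hypothetical names invented here, and the table looks only at the last word, whereas a real GPT model conditions on all of the preceding text.

```python
# Hypothetical toy "model": for each word, the probability of each next word.
# A real GPT model learns these probabilities and uses the whole context.
NEXT_WORD_PROBS = {
    "the": {"cat": 0.6, "dog": 0.4},
    "cat": {"sat": 0.7, "ran": 0.3},
    "dog": {"ran": 0.8, "sat": 0.2},
    "sat": {"down": 1.0},
    "ran": {"away": 1.0},
}


def generate(prompt, max_new_words=5):
    """Extend the prompt one word at a time, always picking the likeliest word."""
    words = prompt.split()
    for _ in range(max_new_words):
        candidates = NEXT_WORD_PROBS.get(words[-1])
        if not candidates:
            break  # the toy table has no continuation for this word
        next_word = max(candidates, key=candidates.get)
        words.append(next_word)  # the new word becomes part of the context
    return " ".join(words)


print(generate("the"))
```

Each generated word is appended to the text and then used to choose the next one, which is the loop the notes describe.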

Understanding GPT 05:19

- GPT stands for Generative Pre-trained Transformer.

- GPT was originally developed by OpenAI as an automated text generator.

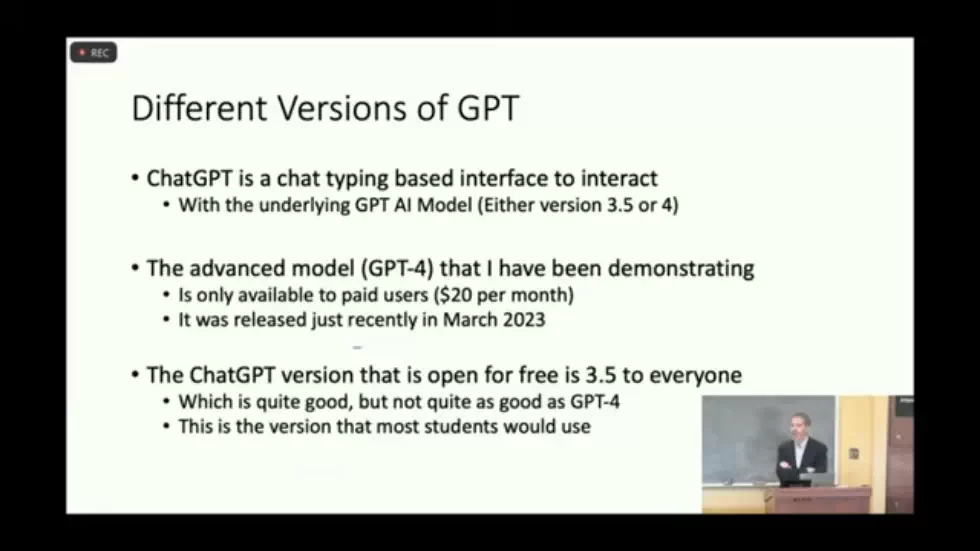

- ChatGPT is the chat-based interface to the actual underlying technology called GPT.

- ChatGPT is not the only way you can interface with GPT.

- GPT can generate many amazing things beyond poems.

- GPT generates text based on the patterns it learned from pre-training on vast quantities of texts.

The Process of Pre-Training 07:42

- Pre-training is one of the magic pieces of GPT.

- Pre-training is a process where they took a vast quantity of text from the internet, actual books, and laws and fed them to the GPT algorithm.

- GPT set loose on vast quantities of text learned a lot of patterns about reasoning and how language works during pre-training.

- Pre-training is an expensive process of getting the AI to learn patterns of human thought and language.

The Components of GPT 07:44

- GPT is composed of three components: Word vectors, Neural networks, and Attention Mechanism.

- Word vectors are a mathematical way to represent words in a text.

- Neural networks are a series of algorithms designed to recognize patterns.

- The Attention mechanism is the ability of the model to focus on specific parts of the input text.

Word Vectors 07:43

- Word vectors are a mathematical way to represent words in a text.

- Word vectors represent the relationship between words in a text.

Neural Networks 07:45

- Neural networks are a series of algorithms designed to recognize patterns.

- Neural networks are used to analyze the patterns in word vectors and generate text.

Attention Mechanism 07:47

- The attention mechanism is the ability of the model to focus on specific parts of the input text.

- The attention mechanism is used to generate appropriate responses to specific inputs.

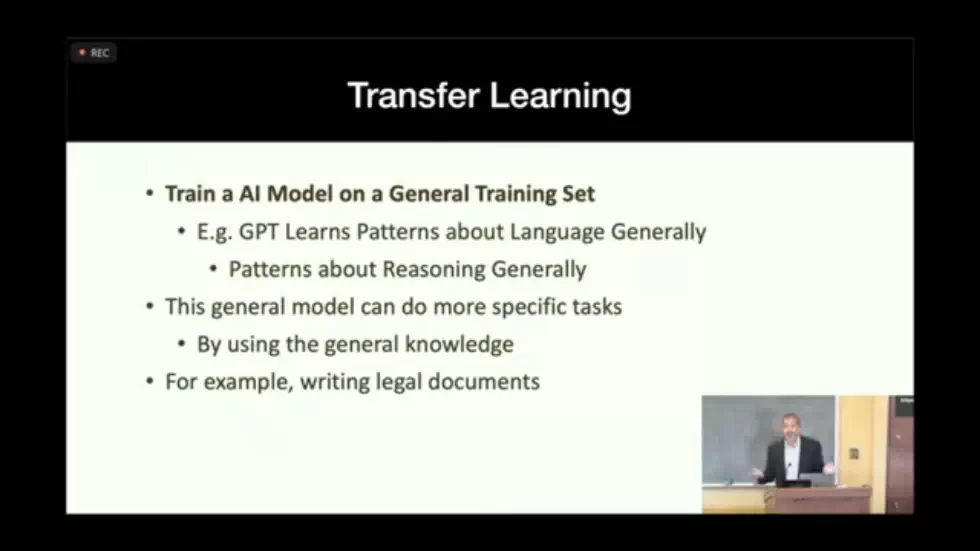

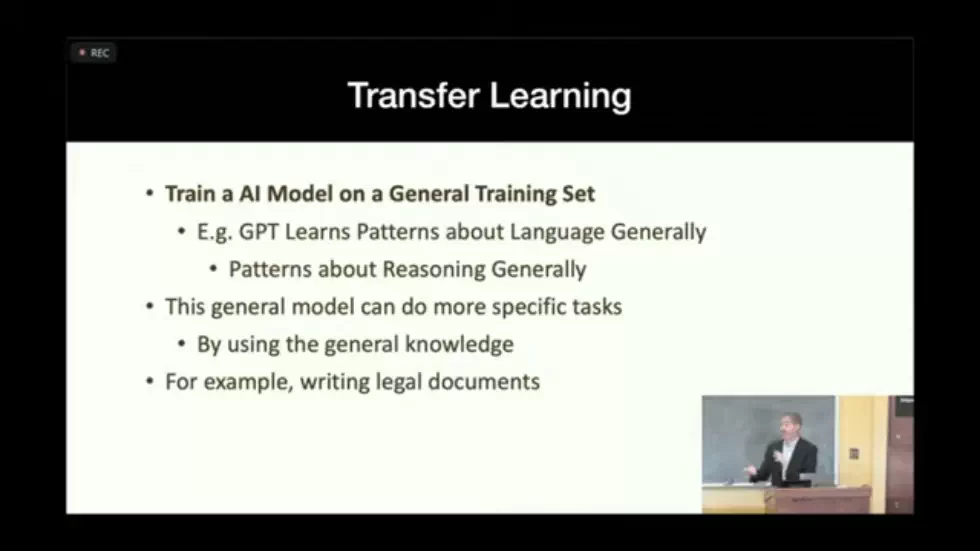

Transfer Learning 07:47

- Transfer learning is the process of reusing knowledge a model learned on one task to help it with another.

- GPT uses transfer learning to improve its performance.

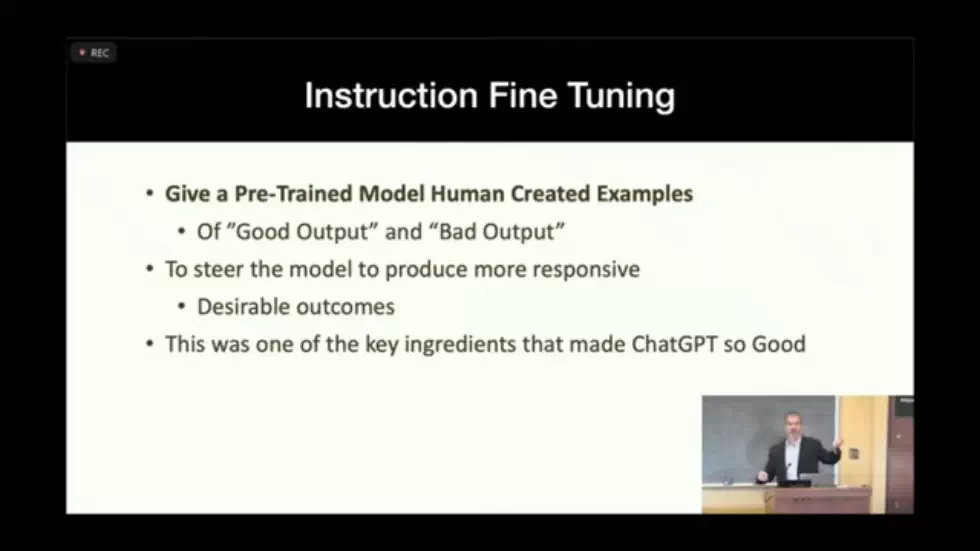

Fine Tuning 07:50

- Fine-tuning is the process of adapting the pre-trained GPT model to a specific task by training it further on a smaller, task-specific dataset.

- Fine-tuning is used to improve the model's performance on specific tasks.

- Fine-tuning is a crucial step in the process of using GPT for specific tasks.

GPT and Transformer Architecture 08:29

- GPT stands for Generative Pre-trained Transformer, which is a large language model.

- It uses vast quantities of language during training to learn different patterns of thought or language.

- The Transformer is an architecture that was invented by Google in 2017 and is built around the attention mechanism.

- It allowed something like GPT to take into account the context of the words surrounding each word in the input.

- Previously, these models often could not work out what you were asking them.

- This was one of the big breakthroughs that allowed these models to be responsive and to appear to understand the request.

- The Transformer is a particular architecture or way of doing something called deep learning.

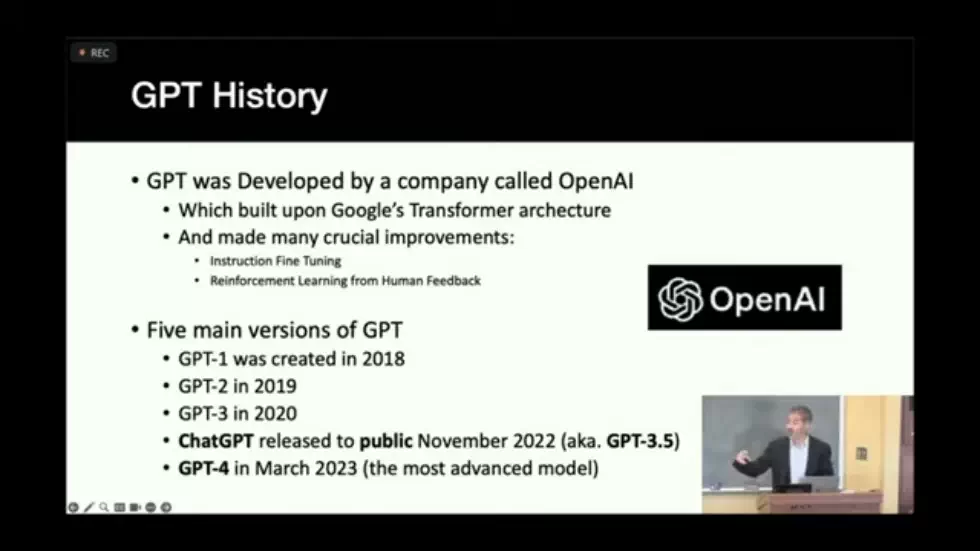

History of GPT 09:43

- GPT was developed by OpenAI, a Bay Area research lab that started as a non-profit and later added a for-profit arm.

- They built upon Google's Transformer architecture and made many major improvements to reach the current state of the art.

- GPT-1 was released in 2018, GPT-2 in 2019, GPT-3 in 2020, and GPT-4 in March 2023.

- GPT-3 was the first one that could generate pretty lifelike text.

- However, it wasn't really useful AI.

- ChatGPT arrived in November 2022 and was a huge breakthrough, based on two improvements that OpenAI made: instruction fine-tuning and reinforcement learning from human feedback.

OpenAI Playground 12:16

- The OpenAI Playground lets you try out these older models, from before late 2022.

- They are frozen in time, a kind of time machine showing what the technology was like just a year ago.

- When GPT-3 came out in 2020, it was pretty good, but they made a couple of improvements up through early 2022.

- However, they weren't really that good other than generating fake Twitter posts or things of that nature.

GPT-3 Advancements 13:28

- In November 2022, ChatGPT (built on GPT-3.5) came out with huge advancements.

- It wasn't just its ability to generate funny poems but also its ability to engage in reasoning and problem solving.

- It was totally unexpected among AI researchers.

- It was an emergent property of this model.

- Another thing that was super shocking to most researchers is the ability to "understand" what was going on.

- There's no evidence that these models are actually thinking or sentient, but they're really good at responding.

- They're amazing at simulating, and we never had systems that could respond sensibly to arbitrary topics.

- Researchers wondered whether GPT-4 could handle legal tasks, and it turns out it can generate a plausible first draft of a patent application.

Word Vectors and Word Embeddings 19:14

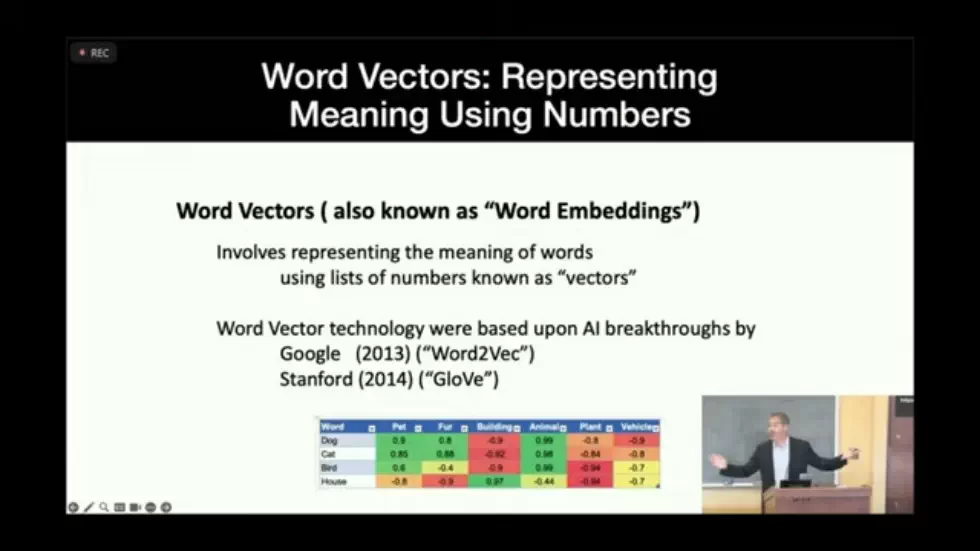

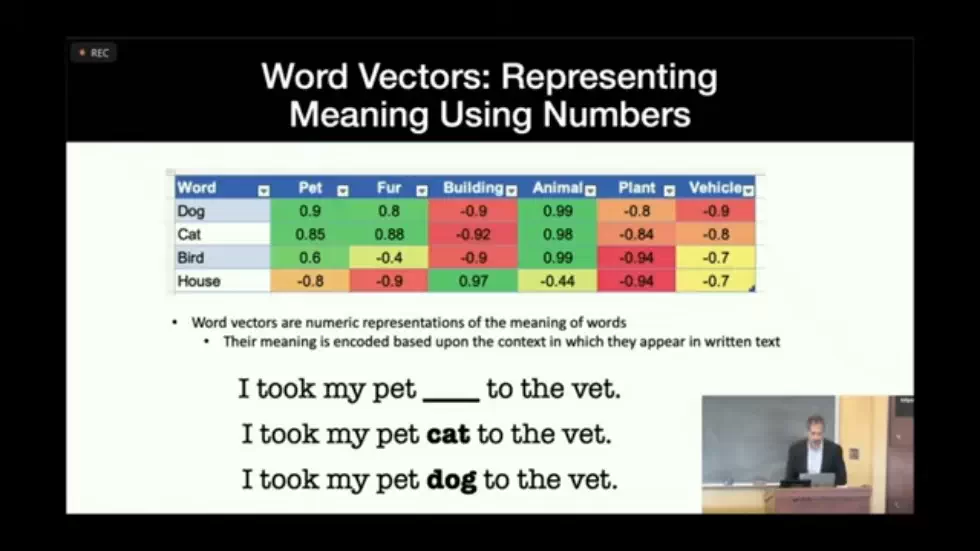

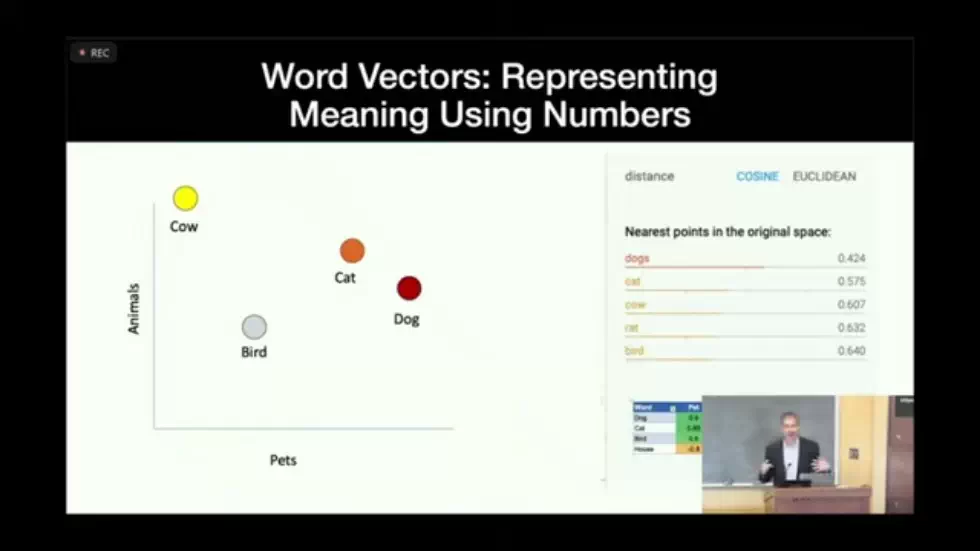

- Word vectors or embeddings are a breakthrough in AI that allows the encoding of the meaning of words as mathematical values.

- This idea was popularized in the early 2010s by researchers at Google (word2vec, 2013) and Stanford (GloVe, 2014).

- Word embeddings are lists of numerical values that represent different aspects of a word.

- The values are like categories that any possible thing could be put into.

- In the lecture's simplified example, the values range from -1 to 1, and the higher the value, the more closely associated the word is with a particular category.

- For example, "dog" is strongly associated with the category "pet" and "animal," as well as being associated with "fur" and not being associated with "building".

- The embeddings are learned by the model by reading texts and learning the associations between words and the categories they belong to.

- The model is trained on billions of text examples to learn the mathematical similarities between words used together.
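
To make the idea of "meaning as numbers" concrete, here is a minimal sketch using made-up vectors. The four labeled dimensions (pet, animal, fur, building) and all the values are assumptions chosen to mirror the "dog" example above; real embeddings have hundreds or thousands of dimensions, and those dimensions do not come with human-readable labels. Cosine similarity is one common way to compare two vectors.

```python
import math

# Hypothetical embeddings; dimensions stand for (pet, animal, fur, building).
EMBEDDINGS = {
    "dog":   [0.9, 0.9, 0.8, -0.9],
    "cat":   [0.9, 0.8, 0.9, -0.8],
    "house": [-0.2, -0.9, -0.9, 0.9],
}


def cosine_similarity(a, b):
    """Return a value near 1 for similar directions and near -1 for opposite ones."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


dog_cat = cosine_similarity(EMBEDDINGS["dog"], EMBEDDINGS["cat"])
dog_house = cosine_similarity(EMBEDDINGS["dog"], EMBEDDINGS["house"])
print(f"dog vs cat:   {dog_cat:.2f}")
print(f"dog vs house: {dog_house:.2f}")
```

With these invented values, "dog" scores as much more similar to "cat" than to "house", which is the kind of relationship a trained model picks up from text.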

GPT/ChatGPT's Evolution 15:55

- In the early 2020s, GPT/ChatGPT was able to generate a first draft of almost any legal document, including contracts.

- The generated drafts were good enough to be considered an okay first draft by a first-year associate.

- As the technology evolved, GPT/ChatGPT became capable of generating more complex documents and even appears to show something like a theory of mind.

- The latest version of GPT/ChatGPT can keep track of objects as they are moved between different rooms and reason about where they end up.

- This breakthrough in AI is one of the biggest in the last 20 years.

- In the video, Professor Harry Surden uses the example of the apple question to demonstrate how the latest version of GPT/ChatGPT can reason about the state of the world.

GPT/ChatGPT's Capabilities 17:30

- GPT/ChatGPT is capable of generating text and making predictions based on the text it has learned.

- The model can also reason about the world and make predictions about what might happen next.

- It can be trained to perform a wide variety of tasks, including language translation, summarization, and even image captioning.

- GPT/ChatGPT can also be fine-tuned to perform specific tasks, such as generating product descriptions or writing news articles.

- It can also be used to generate chatbot responses or answer questions.

Limitations of GPT/ChatGPT 16:54

- While GPT/ChatGPT is capable of generating high-quality text, it is not perfect and can make mistakes.

- The generated text should always be verified before being used in real-world scenarios.

- The model may generate text that is inappropriate or offensive, and it is up to the user to ensure that the generated text is suitable for their purposes.

- GPT/ChatGPT can generate biased text if the training data is biased, and it is important to ensure that the training data is diverse and representative of different perspectives.

Neural Networks and Deep Learning 24:21

- Neural networks learn patterns from data.

- They are loosely inspired by the human brain.

- Neural networks are a flexible technique for encoding patterns.

- In principle, almost any pattern can be encoded in a neural network.

- Deep learning is taking neural networks and scaling them up on lots of data.

- Deep learning rose to prominence around 2012.

Word Vectors 24:11

- Word vectors represent words mathematically.

- They represent each word mathematically, based on the words that appear around it.

- Word vectors are also called word embeddings.

- Word vectors are a crucial breakthrough that made GPT possible.

GPT-3 27:22

- GPT-3 used neural networks and word vectors to generate text.

- According to the lecture, it was trained on a large portion of the internet and about two million books.

- GPT-3 was developed using deep learning.

- GPT-3 is a problem-solving machine.

How GPT Works 27:36

- During training, GPT-3 reads each sentence and tries to predict the next word.

- If it predicts the right word, it gets a thumbs up.

- If it predicts the wrong word, it's corrected.

- In the lecture's analogy, the neurons are like people passing a message along: corrections demote the "people" who sent the data down the wrong path and promote those who sent it down the right path.

- Corrections improve the accuracy of predictions.

Example 28:29

- The example shows the prediction process in action.

- The neural network predicts the wrong word.

- The correct answer is compared to what was predicted.

- Corrections involve demoting and promoting neural network pathways.

- The next time, the neural network sends the data down a different path.

- The process is repeated until the neural network predicts the right answer.
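
The predict, compare, and correct loop above can be sketched with a tiny stand-in for a neural network. Everything here is a simplifying assumption: the three-word `VOCAB`, the starting scores, and the `train` function are invented for illustration, and a real model adjusts billions of weights through backpropagation rather than three numbers.

```python
import math

VOCAB = ["mat", "moon", "car"]  # hypothetical candidates for the next word


def softmax(scores):
    """Turn raw scores into probabilities that sum to 1."""
    highest = max(scores)
    exps = [math.exp(s - highest) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def train(target, scores, learning_rate=0.5, max_steps=100):
    """Nudge the scores until the highest-probability word is the target."""
    scores = list(scores)
    target_idx = VOCAB.index(target)
    for step in range(max_steps):
        probs = softmax(scores)
        predicted = VOCAB[probs.index(max(probs))]
        if predicted == target:
            return scores, step  # right answer: no further correction needed
        for i in range(len(scores)):
            # Cross-entropy gradient: promote the target, demote the others.
            gradient = probs[i] - (1.0 if i == target_idx else 0.0)
            scores[i] -= learning_rate * gradient
    return scores, max_steps


initial_scores = [0.0, 2.0, 1.0]  # the toy model starts out preferring "moon"
final_scores, steps = train("mat", initial_scores)
print(f"corrected after {steps} steps; final scores: {final_scores}")
```

Each wrong prediction raises the score of the correct word and lowers the others slightly, so after a few rounds the toy model predicts the right word.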

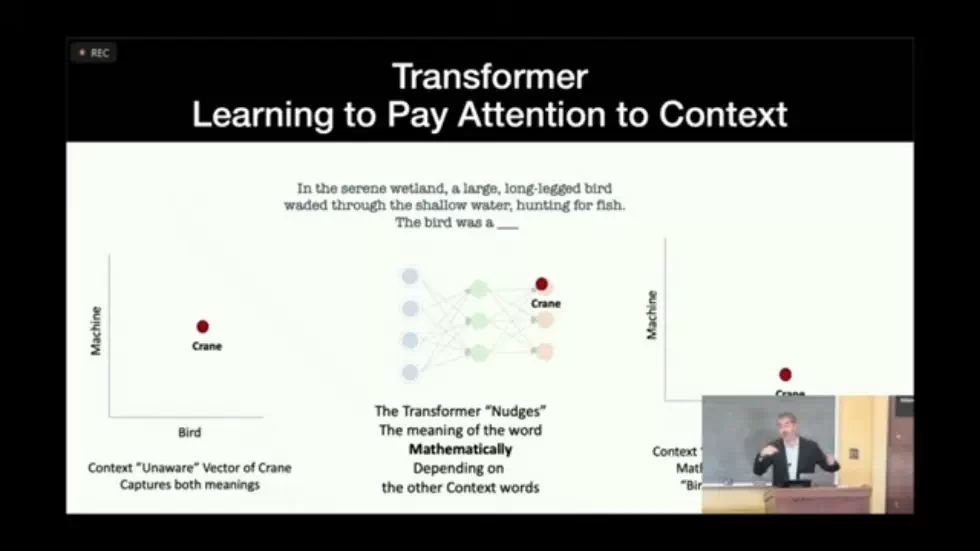

Training and the Transformer 29:48

- The process of training GPT involves initially randomizing billions of parameters and then nudging them up and down billions of times to teach it the English language and reasoning.

- This process would not have been possible without the mathematics of the Transformer, which was Google's invention.

- The Transformer takes advantage of the fact that words can be represented as vectors or numbers.

- It nudges the meaning of a word towards one meaning or the other based on the context of the sentence.

- This is accomplished by shifting the word's vector toward a region of the vector space that reflects the surrounding context: both the text that was passed in and what the model has generated so far.

- The output vector represents the meaning of the word in the given context.
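
A stripped-down sketch of this context nudging, using the ambiguous word "bank" as an illustration. The two-dimensional `VECTORS` (roughly "nature" and "finance") and the helper names are assumptions made for this example. A real Transformer also applies learned query, key, and value projections and stacks many attention heads and layers, all of which are omitted here.

```python
import math


def softmax(scores):
    highest = max(scores)
    exps = [math.exp(s - highest) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attend(query, context):
    """Scaled dot-product attention; context vectors act as keys and values."""
    scale = math.sqrt(len(query))
    scores = [sum(q * k for q, k in zip(query, key)) / scale for key in context]
    weights = softmax(scores)
    # The output is a weighted blend of the context vectors.
    return [
        sum(w * vec[d] for w, vec in zip(weights, context))
        for d in range(len(query))
    ]


# Hypothetical vectors: dimension 0 is "nature", dimension 1 is "finance".
VECTORS = {
    "river": [1.0, 0.0],
    "money": [0.0, 1.0],
    "bank":  [0.5, 0.5],  # ambiguous on its own
}


def contextual_bank(other_word):
    """Return the vector for 'bank' after attending to a two-word context."""
    context = [VECTORS[other_word], VECTORS["bank"]]
    return attend(VECTORS["bank"], context)


river_bank = contextual_bank("river")
money_bank = contextual_bank("money")
print("bank near 'river':", river_bank)
print("bank near 'money':", money_bank)
```

The same starting vector for "bank" ends up leaning toward "nature" next to "river" and toward "finance" next to "money", which is the sense in which the output vector encodes meaning in context.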

Transfer Learning 33:45

- Transfer learning is a technique where a model is trained for a broad range of tasks rather than a single narrow task.

- GPT-3 was trained on a large portion of the text on the internet, compressed into a neural network of 175 billion parameters.

- OpenAI believed that the capability to do almost anything was lurking in GPT-3 and they just needed to pull it out by clever engineering.

- The researchers at OpenAI used a technique called instruction fine-tuning to give the model thousands of examples of good questions and answers.

- The model uses these examples to learn how to provide more accurate answers.

Nudging the Model 35:21

- Even though GPT-3 seemed dumb initially, researchers at OpenAI believed it had the capability to do almost anything.

- They used a technique called instruction fine-tuning to give the model thousands of examples of good questions and answers.

- The model uses these examples to learn how to provide more accurate answers.

- This technique is a way of nudging the model into ChatGPT by teaching it how to answer questions more accurately.

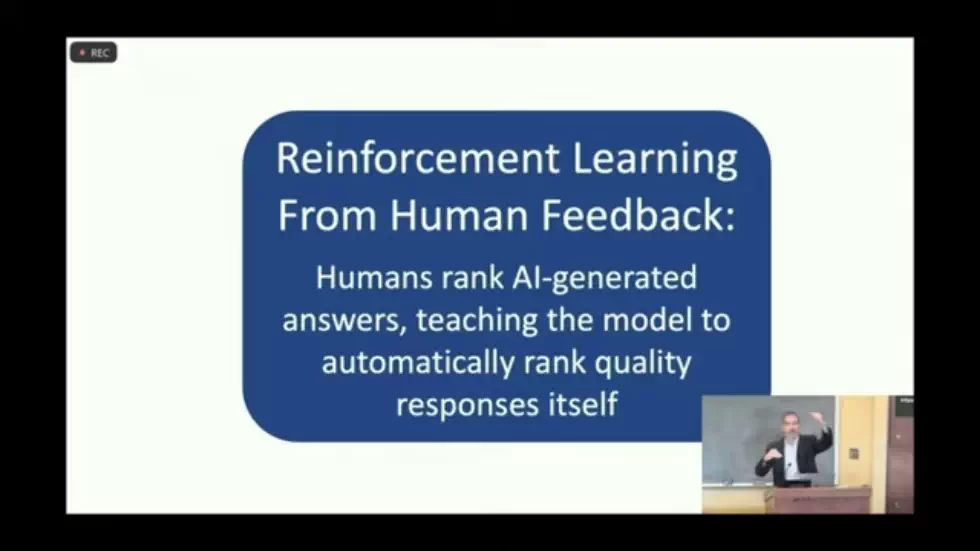

Training GPT models 36:42

- GPT models are trained to become good at writing poems and other things.

- Researchers have people rank several outputs (five, in the lecture's example) that the model produced for the same prompt.

- The outputs are ranked from one to five, with one being the best and five being the worst.

- The scores from the rankings are used to train another AI system called a reward model.

- The reward model learns what good output looks like, and this knowledge is used to improve the GPT model.
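
One common way to turn such rankings into training signal is to split each ranking into "better versus worse" pairs and penalize the reward model whenever it scores the worse output higher. The sketch below assumes that pairwise formulation; the function names and the example rewards are hypothetical, and the notes do not say exactly how OpenAI implements this step.

```python
import math
from itertools import combinations


def ranking_to_pairs(ranked_outputs):
    """Given outputs ordered best first, return every (better, worse) pair."""
    return list(combinations(ranked_outputs, 2))


def pairwise_loss(reward_better, reward_worse):
    """Small when the preferred output gets the higher reward, large otherwise."""
    difference = reward_better - reward_worse
    return -math.log(1.0 / (1.0 + math.exp(-difference)))


# A human ranked five outputs, from best ("A") to worst ("E").
pairs = ranking_to_pairs(["A", "B", "C", "D", "E"])
print(len(pairs), "preference pairs, for example", pairs[0])

# The reward model is penalized more when it disagrees with the human ranking.
print("agrees with human:   ", round(pairwise_loss(2.0, 0.0), 3))
print("disagrees with human:", round(pairwise_loss(0.0, 2.0), 3))
```

A single ranking of five outputs yields ten comparisons, so a modest amount of human labeling produces a fair amount of training data for the reward model.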

ChatGPT 37:31

- The GPT models produced by the above process are used to produce ChatGPT.

- ChatGPT is an AI system that can produce human-like responses to text inputs.

- It was created by training a GPT model with a billion examples of good and bad output.

GPT-4 37:49

- GPT-4 is the latest version of GPT.

- It is much better than the GPT-3.5 model behind the original ChatGPT.

The limitations of GPT models 37:55

- GPT models are not perfect and make mistakes.

- When the model confidently states something that is false, the mistake is often called a hallucination.

- The author believes that there is a path forward for reducing these mistakes.

- However, it is important to double-check the model's outputs.

The impact of GPT models on society 38:16

- The author believes that GPT models are amazing and represent the biggest breakthrough he has seen in his 20 years of studying AI.

- GPT models have the potential to accelerate research and create new knowledge.

- However, the author also believes that GPT models will be disruptive for jobs and society.

- He does not believe that AI will take over the world, but he does think that it will have a significant impact on society.
